Autonomous driving with LLMs, VLMs, and MLLMs

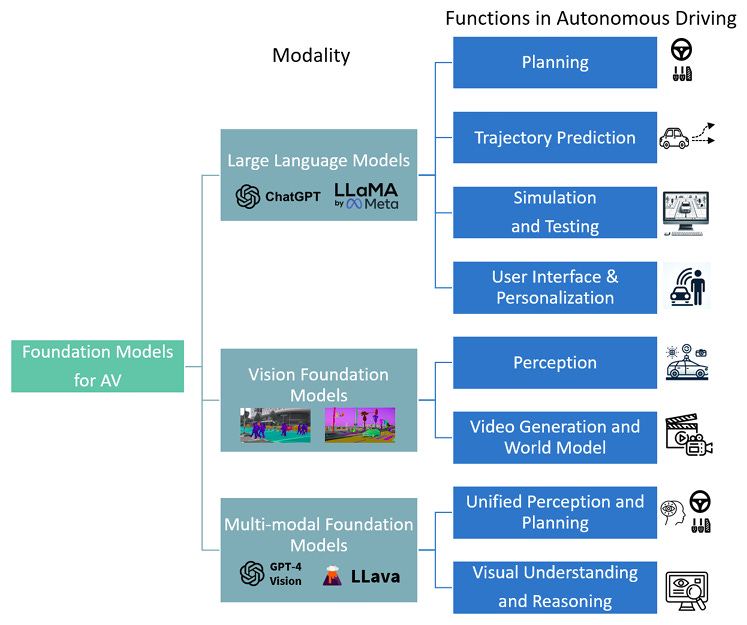

Discussing the application of Large Language/Vision Models in autonomous driving and the most significant developments and approaches.

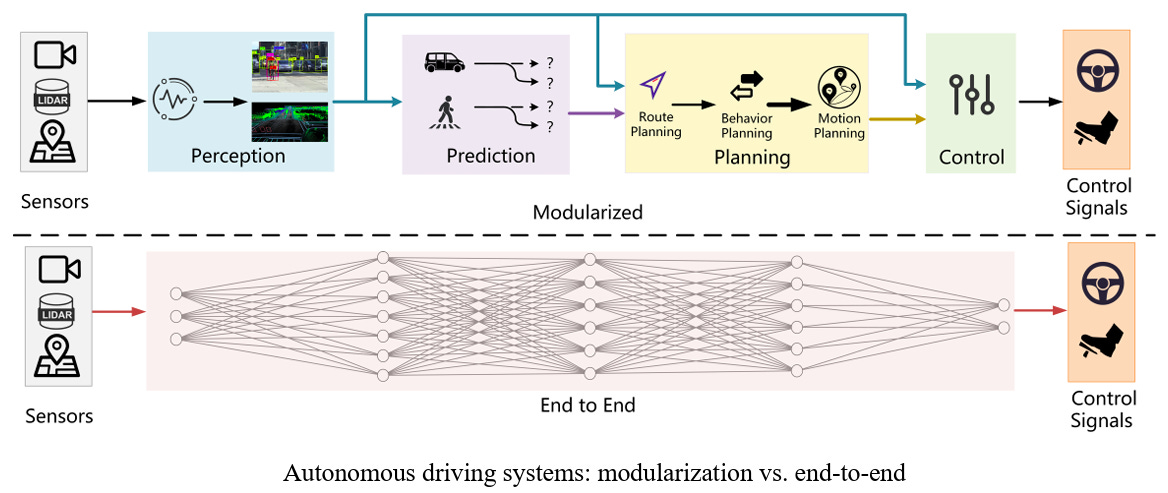

Implementing large models in autonomous driving has improved vehicles' ability to drive and to understand their surroundings. Large models overcome many of the challenges faced by the two older approaches, the modular pipeline and end-to-end, including generalization, interpretability, causal confusion, and robustness. In more detail, the challenges facing the past approaches are:

Modular pipeline challenges:

Difficulty integrating the different parts of the system, which mainly consists of four separate parts.

Unavoidable gaps between the system modules, in both time delays and accuracy of results, compound as information moves deeper into the system, causing bigger problems and degrading performance.

End-to-end solution challenges:

The information channels from one end of the system to the other are very long, which causes delays and the loss of some information.

The network structure is so complex that it is difficult to debug or optimize.

The information and driving performance of the networks lack a human driver's common sense.

Explainability: in many scenarios, the results, actions, and states of the algorithm are unpredictable and hard for humans to understand.

Human-vehicle interaction: the human experience of interacting with the vehicle, and the stability of the self-driving algorithm, are lacking and could be greatly improved.

Data scarcity: recording road data and creating autonomous-driving datasets with the correct type of annotation has never been easy, which makes training higher-performing models more challenging.

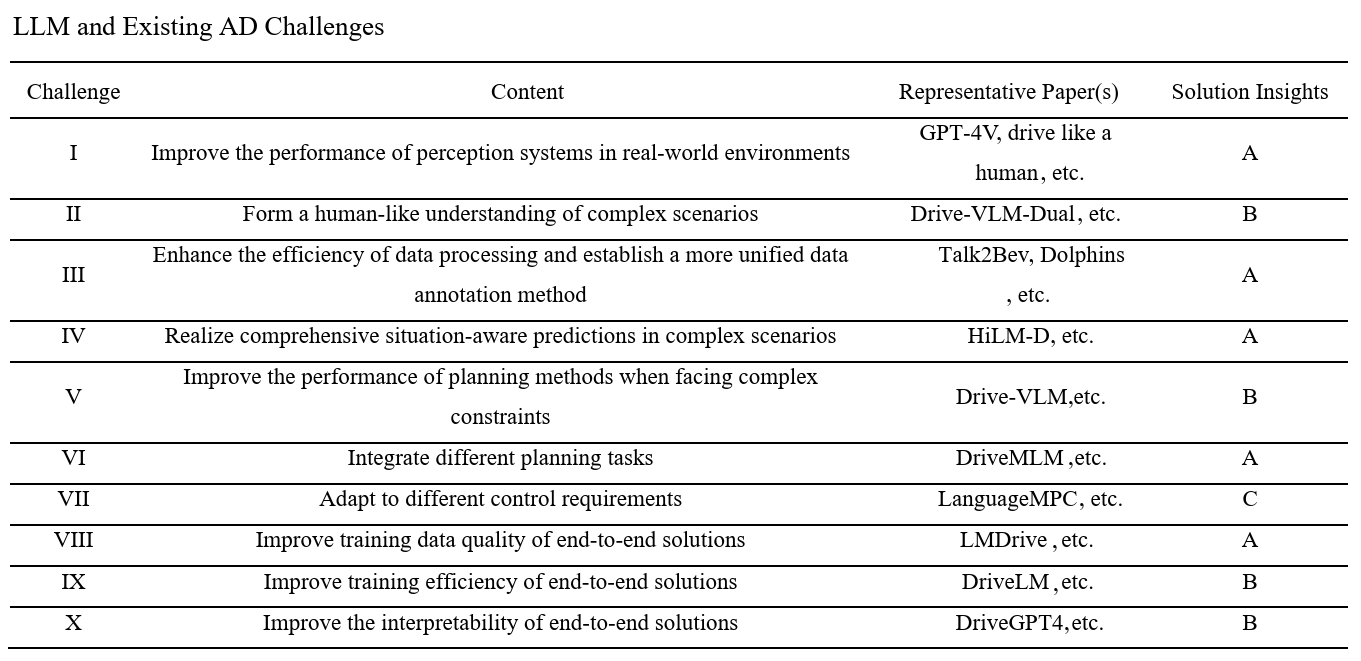

Some of the autonomous driving research that addresses these challenges using different types of large models is listed in the following table:

The tasks and use cases that large models deliver are discussed next, summarizing the content column of the table above and exploring some models from the research as examples:

LLM use cases:

The graph at the top of the blog shows some of the functions performed by large models:

Perception: large models perform strongly on tasks such as object referring and tracking, and open-vocabulary traffic environment perception. Their few-shot learning ability also improves perception when data is scarce.

Planning: in this field, large models have shown strong open-world reasoning, and there are two main ways of adapting them:

Fine-tuning pre-trained models: many approaches and datasets are available for fine-tuning a large model to improve its autonomous-driving performance. Some of the approaches are:
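Open-vocabulary perception is commonly built on CLIP-style models, where an image region is compared against free-form text prompts instead of a fixed label set. The sketch below shows only that matching step, assuming the embeddings have already been produced by image and text encoders; the toy 3-d vectors, the prompt wording, and the `zero_shot_label` helper are hypothetical.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def zero_shot_label(region: list[float], prompts: dict[str, list[float]], temperature: float = 0.01):
    """Score a region embedding against free-form text prompts.

    Returns the best-matching prompt and softmax probabilities over all prompts.
    """
    logits = {p: cosine(region, emb) / temperature for p, emb in prompts.items()}
    max_logit = max(logits.values())  # subtract the max for numerical stability
    exps = {p: math.exp(v - max_logit) for p, v in logits.items()}
    total = sum(exps.values())
    probs = {p: e / total for p, e in exps.items()}
    best = max(probs, key=probs.get)
    return best, probs


# Toy embeddings standing in for real image/text encoder outputs.
prompts = {
    "a photo of a pedestrian": [0.9, 0.1, 0.0],
    "a photo of a traffic cone": [0.1, 0.9, 0.1],
    "a photo of a stroller": [0.7, 0.2, 0.6],
}
region = [0.8, 0.15, 0.1]
best, probs = zero_shot_label(region, prompts)
print(best)  # a photo of a pedestrian
```

Because the label set is just a dictionary of text prompts, a new category can be added at inference time without retraining, which is what makes this approach attractive when annotated data is scarce.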

Parameter-efficient fine-tuning, such as low-rank adaptation.

Prompt tuning, such as learnable continuous vectors or soft prompts.

Instruction tuning.

Reinforcement learning from human feedback.
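To make the first of these concrete, low-rank adaptation (LoRA) keeps a pre-trained weight matrix W frozen and learns a low-rank update B·A on top of it. The pure-Python sketch below shows the forward pass and the parameter saving; the layer sizes, rank, and `alpha` value are illustrative assumptions, and real fine-tuning would use a deep learning framework rather than lists.

```python
import random


def matvec(m: list[list[float]], v: list[float]) -> list[float]:
    """Matrix (list of rows) times vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class LoRALinear:
    """Linear layer y = W x + (alpha / r) * B (A x) with W frozen.

    Only A (r x d_in) and B (d_out x r) would be updated during fine-tuning.
    """

    def __init__(self, weight: list[list[float]], rank: int, alpha: float, seed: int = 0):
        self.weight = weight  # frozen pre-trained weights, shape (d_out, d_in)
        d_out, d_in = len(weight), len(weight[0])
        self.scale = alpha / rank
        rng = random.Random(seed)
        # A gets a small random init; B starts at zero so the adapter is initially a no-op.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(d_out)]

    def forward(self, x: list[float]) -> list[float]:
        base = matvec(self.weight, x)
        delta = matvec(self.B, matvec(self.A, x))
        return [b + self.scale * d for b, d in zip(base, delta)]


def lora_trainable_params(d_in: int, d_out: int, rank: int) -> int:
    """Number of trainable parameters in the A and B adapters."""
    return rank * (d_in + d_out)


layer = LoRALinear([[1.0, 2.0], [3.0, 4.0]], rank=1, alpha=2.0)
print(layer.forward([1.0, 1.0]))  # [3.0, 7.0]: identical to W x while B is zero
print(lora_trainable_params(4096, 4096, rank=8), "vs", 4096 * 4096)  # 65536 vs 16777216
```

For a hypothetical 4096×4096 projection, rank 8 trains about 0.4% of the parameters of the full matrix, which is why LoRA is a popular way to adapt large models to driving data.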

Prompt engineering: some methods try to exploit the model's full reasoning potential with carefully engineered prompts, for example using chain-of-thought prompting to guide the model's reasoning process toward the most accurate result.

Question answering: this use case improves the explainability of the model's actions and provides better human-vehicle interaction and understandability for the user, which could lead to improved dataset generation and make it possible to optimize the model.

Generation: large models can use their generative capability to create and caption videos of driving scenarios, and to generate question-answer pairs or descriptions of driving scenes.
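A chain-of-thought prompt for planning can be as simple as a fixed list of reasoning steps followed by a constrained answer format. The sketch below is a hypothetical template, not the prompt used by any specific paper; the step wording, the `Action:` convention, and the allowed action set are all assumptions, and the model call itself is replaced by a canned reply.

```python
from typing import Optional

COT_STEPS = [
    "1. List the road users and objects that matter to the ego vehicle.",
    "2. Predict what each of them is likely to do next.",
    "3. Check the traffic rules and safety constraints that apply.",
    "4. Choose a high-level action and explain why.",
]


def build_cot_prompt(scene: str, question: str) -> str:
    """Assemble a chain-of-thought prompt for a driving decision."""
    return (
        "You are the planning module of an autonomous vehicle.\n"
        f"Scene: {scene}\n"
        f"Question: {question}\n"
        "Think step by step:\n"
        + "\n".join(COT_STEPS)
        + "\nEnd with a final line of the form 'Action: <ACTION>'."
    )


def parse_action(response: str, allowed: set[str]) -> Optional[str]:
    """Extract the final action, rejecting anything outside the allowed set."""
    for line in reversed(response.strip().splitlines()):
        if line.strip().startswith("Action:"):
            action = line.split(":", 1)[1].strip().upper()
            return action if action in allowed else None
    return None


prompt = build_cot_prompt(
    "Two-lane road, a cyclist 20 m ahead in the ego lane, oncoming car in the left lane.",
    "Should the ego vehicle overtake the cyclist?",
)
reply = "...reasoning...\nAction: follow"  # stand-in for a real model response
print(parse_action(reply, {"FOLLOW", "OVERTAKE", "STOP"}))  # FOLLOW
```

Restricting the final answer to a known action set keeps the free-form reasoning useful for explanation while giving downstream planning code something it can validate.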
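As an illustration of how question-answer pairs can be derived from annotated scenes, the sketch below turns structured object annotations into QA pairs with simple templates. This is a deliberately simplified stand-in: the research described above uses large models to generate far richer pairs, and the `SceneObject` fields, questions, and answer wording here are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SceneObject:
    category: str
    distance_m: float
    lane: str  # "ego", "left" or "right"


def generate_qa_pairs(objects: list[SceneObject], ego_action: str) -> list[tuple[str, str]]:
    """Turn structured scene annotations into question-answer pairs."""
    pairs = [("How many annotated objects are in the scene?", str(len(objects)))]
    if objects:
        nearest = min(objects, key=lambda o: o.distance_m)
        pairs.append((
            "What is the closest object to the ego vehicle?",
            f"A {nearest.category} about {nearest.distance_m:.0f} m away in the {nearest.lane} lane.",
        ))
    in_lane = [o.category for o in objects if o.lane == "ego"]
    pairs.append((
        "Is anything in the ego lane?",
        f"Yes: {', '.join(in_lane)}." if in_lane else "No.",
    ))
    pairs.append(("What should the ego vehicle do?", ego_action))
    return pairs


scene = [SceneObject("car", 25.0, "ego"), SceneObject("pedestrian", 12.0, "right")]
for question, answer in generate_qa_pairs(scene, "Slow down and keep distance from the pedestrian."):
    print(question, "->", answer)
```

Pairs like these can serve as training or evaluation data for the question-answering use case, which is one way generation and explainability feed into each other.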

Example large models:

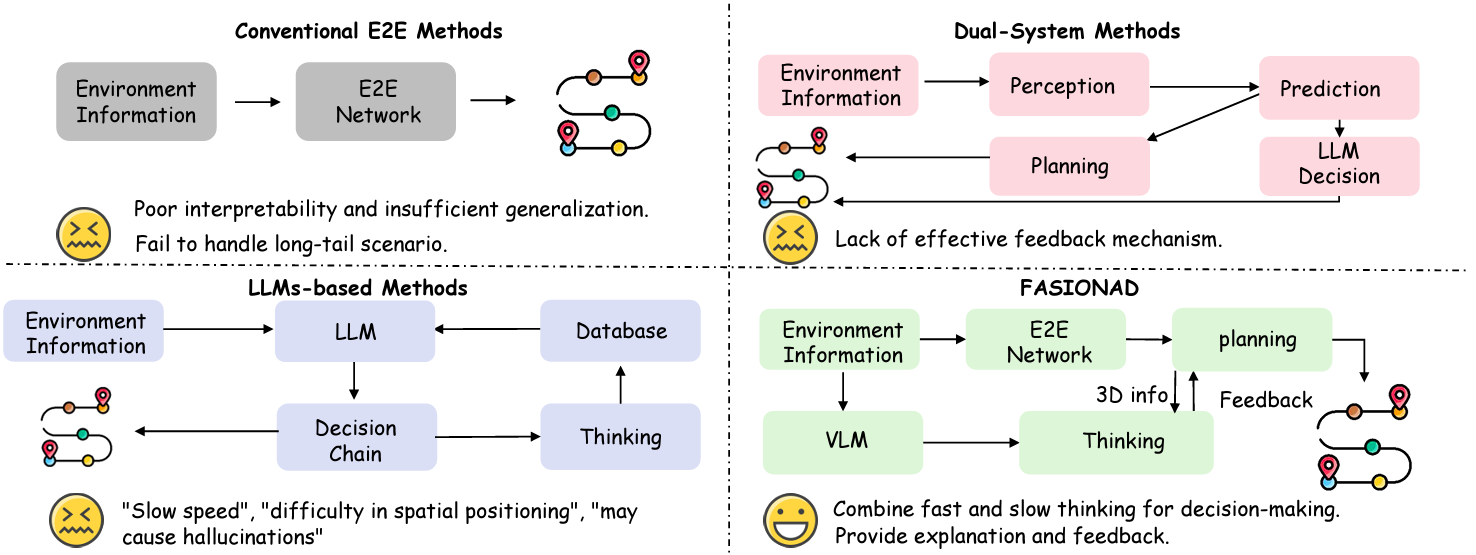

FASIONAD (FAst and Slow fusION Thinking Systems): an adaptive feedback network that handles both common and rare driving events. It makes decisions using a fast-thinking network, a slow-thinking network, and a switch between them: complex tasks are routed to slow thinking, while common, simple tasks use fast thinking. LLMs and VLMs are used in the slow-thinking path, so the system delivers high performance while maintaining safety. The next figure shows the general structure of the model and how it differs from other approaches.
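The fast/slow switch can be pictured as a router that sends each scene to one of two planners. The sketch below is only a schematic of that idea: the `complexity` score, its weights, the 0.5 threshold, and the `Observation` fields are hypothetical choices, not FASIONAD's actual switching criterion, and the slow planner is a placeholder for an LLM/VLM call.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Observation:
    num_agents: int
    is_rare_event: bool         # e.g. flagged by an out-of-distribution detector
    planner_uncertainty: float  # 0..1, e.g. spread of candidate trajectories


def complexity(obs: Observation) -> float:
    """Hypothetical complexity score in [0, 1]."""
    crowd = min(obs.num_agents, 20) / 20
    return 0.5 * obs.planner_uncertainty + 0.3 * float(obs.is_rare_event) + 0.2 * crowd


def route(
    obs: Observation,
    fast: Callable[[Observation], str],
    slow: Callable[[Observation], str],
    threshold: float = 0.5,
) -> tuple[str, str]:
    """Send simple scenes to the fast planner and complex ones to the slow (LLM/VLM) path."""
    if complexity(obs) > threshold:
        return "slow", slow(obs)
    return "fast", fast(obs)


def fast_planner(obs: Observation) -> str:
    return "follow lane"


def slow_planner(obs: Observation) -> str:
    return "yield, then re-plan around the obstruction"  # placeholder for an LLM/VLM call


print(route(Observation(3, False, 0.1), fast_planner, slow_planner))  # ('fast', 'follow lane')
print(route(Observation(8, True, 0.6), fast_planner, slow_planner))
```

The design point is that the expensive slow path only runs when the scene warrants it, so average latency stays close to that of the fast network.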

VLM-AD: instead of relying on trajectory-planning supervision alone during training, it uses a VLM as a teacher to provide extra supervision, which enriches the learned features. The VLM is used only for training, not for inference, because it would increase the model's run-time latency.

As shown in the graph, the information from the VLM is used by the auxiliary heads, which are a CLIP network in this case. They receive the ego information (the vehicle's position, speed, direction, etc.) from the trajectory model and align it with the language descriptions from the VLM teacher to improve the driving decision.
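One common way to align two embedding spaces, as the auxiliary heads do with ego features and text, is a CLIP-style symmetric contrastive (InfoNCE) loss. The sketch below implements that generic loss in pure Python; it is an assumption used for illustration and not necessarily VLM-AD's exact training objective, and the toy feature values and temperature of 0.07 are hypothetical.

```python
import math


def normalize(v: list[float]) -> list[float]:
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def mean_cross_entropy(logits: list[list[float]]) -> float:
    """Cross-entropy where the correct class for row i is column i."""
    total = 0.0
    for i, row in enumerate(logits):
        m = max(row)
        log_sum_exp = m + math.log(sum(math.exp(x - m) for x in row))
        total += log_sum_exp - row[i]
    return total / len(logits)


def clip_style_loss(ego_feats, text_embs, temperature: float = 0.07) -> float:
    """Symmetric InfoNCE loss: ego feature i should match text embedding i in the batch."""
    ego = [normalize(v) for v in ego_feats]
    txt = [normalize(v) for v in text_embs]
    logits = [[sum(a * b for a, b in zip(e, t)) / temperature for t in txt] for e in ego]
    transposed = [list(col) for col in zip(*logits)]
    return 0.5 * (mean_cross_entropy(logits) + mean_cross_entropy(transposed))


ego_batch = [[1.0, 0.0], [0.0, 1.0]]      # projected ego features (toy values)
matching_text = [[0.9, 0.1], [0.1, 0.9]]  # embeddings of the matching VLM descriptions
swapped_text = [[0.1, 0.9], [0.9, 0.1]]
print(clip_style_loss(ego_batch, matching_text) < clip_style_loss(ego_batch, swapped_text))  # True
```

Because this loss only appears during training, the deployed driving model carries no VLM at run time, which matches the latency argument above.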

DriveGPT4: a multimodal LLM capable of processing multi-frame video inputs and textual queries, delivering the following functionalities:

Provides pertinent reasoning.

Addresses a wide range of user questions.

Predicts low-level end-to-end control commands.

It works by taking input from a front-view camera and producing control commands as output; in addition, human users can converse with the model.

The model consists of the following parts:

Video tokenizer: consists of a visual encoder (CLIP) and a projector; it converts video frames into text-domain tokens.

LLaMA 2 as the LLM, which reasons over and analyzes the tokens.

De-tokenizer, inspired by RT-2, which converts the LLM's output into control commands understandable by the vehicle and natural-language text understandable by the user.
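RT-2 represents robot actions as tokens by discretizing each action dimension into 256 bins. The sketch below applies that idea to driving controls to show what a de-tokenizer does; the control ranges, the `"<bin> <bin> | <explanation>"` output format, and the helper names are hypothetical and not taken from DriveGPT4's code.

```python
NUM_BINS = 256  # RT-2 uses 256 bins per action dimension; the ranges below are hypothetical


def encode_action(value: float, low: float, high: float, num_bins: int = NUM_BINS) -> int:
    """Map a continuous control value to a bin index in [0, num_bins - 1]."""
    clipped = min(max(value, low), high)
    fraction = (clipped - low) / (high - low)
    return min(int(fraction * num_bins), num_bins - 1)


def decode_action(bin_index: int, low: float, high: float, num_bins: int = NUM_BINS) -> float:
    """Map a bin index back to the centre of its bin."""
    width = (high - low) / num_bins
    return low + (bin_index + 0.5) * width


def detokenize(llm_output: str, ranges: dict[str, tuple[float, float]]):
    """Split hypothetical LLM output '<bin> <bin> | <explanation>' into controls and text."""
    control_part, _, explanation = llm_output.partition("|")
    bins = [int(tok) for tok in control_part.split()]
    controls = {
        name: decode_action(b, low, high)
        for (name, (low, high)), b in zip(ranges.items(), bins)
    }
    return controls, explanation.strip()


RANGES = {"speed_mps": (0.0, 30.0), "steer_deg": (-30.0, 30.0)}
tokens = f"{encode_action(12.0, *RANGES['speed_mps'])} {encode_action(0.0, *RANGES['steer_deg'])}"
controls, text = detokenize(tokens + " | Keeping lane, traffic ahead is clear.", RANGES)
print(tokens)    # 102 128
print(controls)  # {'speed_mps': 12.01171875, 'steer_deg': 0.1171875}
print(text)
```

The round trip is lossy by at most half a bin width (about 0.06 m/s for speed here), which is the trade-off for letting a language model emit controls as ordinary tokens alongside its explanation.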

The model architecture is shown in the graph below:

It is important to mention that training has two stages:

The pre-training stage focuses on video-text alignment using the CC3M and WebVid-2M datasets; only the projector is trained, while the encoder and LLM weights are frozen.

The mix-finetuning stage trains the model for question answering; in this stage, the LLM is trained alongside the projector.
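The two-stage schedule boils down to changing which modules receive gradient updates. The sketch below encodes the freezing pattern described above as a small configuration; the module names and parameter counts are illustrative placeholders, not DriveGPT4's actual sizes. In PyTorch, the same effect is achieved by setting `requires_grad` on each module's parameters.

```python
from dataclasses import dataclass


@dataclass
class Module:
    name: str
    num_params: int  # illustrative sizes only
    trainable: bool = False


# Which modules are updated in each stage, following the two-stage description above.
TRAINABLE_BY_STAGE = {
    "pretrain": {"projector"},
    "mix_finetune": {"projector", "llm"},
}


def configure_stage(modules: list[Module], stage: str) -> int:
    """Freeze/unfreeze modules for a stage and return the trainable parameter count."""
    trainable = TRAINABLE_BY_STAGE[stage]
    for module in modules:
        module.trainable = module.name in trainable
    return sum(m.num_params for m in modules if m.trainable)


model = [
    Module("visual_encoder", 300_000_000),
    Module("projector", 5_000_000),
    Module("llm", 7_000_000_000),
]
for stage in ("pretrain", "mix_finetune"):
    count = configure_stage(model, stage)
    frozen = [m.name for m in model if not m.trainable]
    print(stage, f"{count:,} trainable; frozen: {frozen}")
```

Training only the small projector first lets the visual tokens learn to "speak" the LLM's embedding space cheaply before the much larger LLM is updated for question answering.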

LanguageMPC: uses an LLM as the brain of the autonomous driving system. The LLM makes the high-level decisions and creates a low-level mathematical representation that is fed into a Model Predictive Controller (MPC). Another significant detail is that the system presents a chain-of-thought framework for driving scenarios. The following graph shows the model in detail:

The system works in the following steps:

The LLM gathers information.

It reasons through a thought process.

It renders judgments.

Looking at part (a) of the figure from left to right, in the block after the environment block, the GPT model:

Identifies the vehicles requiring attention (the first branch).

Evaluates the situation.

Offers action guidance.
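The identify, evaluate, and guide steps form a prompt chain in which each answer is fed into the next prompt. The sketch below shows that structure with a generic `llm` callable; the prompt wording, the action vocabulary, and the `stub_llm` replies are hypothetical stand-ins, not LanguageMPC's actual prompts.

```python
from typing import Callable

LLM = Callable[[str], str]  # any function that maps a prompt to a completion


def language_mpc_reasoning(llm: LLM, scene: str) -> dict[str, str]:
    """Chain three prompts, feeding each answer into the next one."""
    attention = llm(f"Scene: {scene}\nWhich vehicles require attention? List them.")
    situation = llm(
        f"Scene: {scene}\nVehicles requiring attention: {attention}\nEvaluate the situation."
    )
    guidance = llm(
        f"Scene: {scene}\nSituation: {situation}\n"
        "Offer action guidance (accelerate, decelerate, keep speed, or change lane)."
    )
    return {"attention": attention, "situation": situation, "guidance": guidance}


def stub_llm(prompt: str) -> str:
    """Canned replies standing in for a real model."""
    if "Offer action guidance" in prompt:
        return "decelerate"
    if "Evaluate the situation" in prompt:
        return "vehicle 2 will enter the ego lane within about 2 s"
    return "vehicle 2 (merging from the right)"


print(language_mpc_reasoning(stub_llm, "Highway, ego in the right lane at 25 m/s, on-ramp ahead."))
```

Keeping each step as a separate call makes the intermediate reasoning inspectable, which is the explainability benefit the chain-of-thought framework is meant to provide.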

Transforms the three types of information above from text into mathematics, namely:

The observation matrix, which contains information about the vehicle's surroundings.

The weight matrix, which sets how strongly the MPC weighs terms such as acceleration and steering for the situation the vehicle is in.

The action bias, which gives a bias value for the steering or acceleration.

In brief, MPC is an optimal control technique in which the computed control actions minimize a cost function for a dynamical system. For more details about the control process, see the MathWorks link in the references [8].

Other examples, such as WiseAD, CarLLaVA, and LMDrive, each offer a different approach and solution to a number of problems.

Please feel free to reach out on LinkedIn and X, or ask questions and leave comments.
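A receding-horizon controller can be sketched in a few lines: simulate each candidate control sequence over a short horizon, pick the cheapest, and apply only its first action. The toy below controls speed with a discrete set of accelerations and brute-force search; the dynamics, the candidate set, and the idea of passing LLM-derived `weights` and `action_bias` into the cost are simplifying assumptions meant to echo LanguageMPC's weight matrix and action bias, not its actual formulation.

```python
import itertools


def mpc_step(
    v0: float,
    v_ref: float,
    weights: dict[str, float],
    action_bias: float,
    horizon: int = 3,
    dt: float = 0.5,
    candidates: tuple[float, ...] = (-2.0, -1.0, 0.0, 1.0, 2.0),
) -> float:
    """Return the first acceleration of the lowest-cost sequence (receding horizon).

    Cost per step: w_speed * (v - v_ref)^2 + w_accel * (a - action_bias)^2,
    with toy dynamics v_{k+1} = v_k + a_k * dt.
    """
    best_cost, best_first = float("inf"), 0.0
    for seq in itertools.product(candidates, repeat=horizon):
        v, cost = v0, 0.0
        for a in seq:
            v = v + a * dt
            cost += weights["speed"] * (v - v_ref) ** 2 + weights["accel"] * (a - action_bias) ** 2
        if cost < best_cost:
            best_cost, best_first = cost, seq[0]
    return best_first


# Tracking-dominated weights: below the reference speed, the controller accelerates.
print(mpc_step(10.0, 15.0, {"speed": 1.0, "accel": 0.1}, action_bias=0.0))
# Guidance such as "decelerate" could map to a negative bias with a heavier acceleration weight.
print(mpc_step(15.0, 15.0, {"speed": 0.1, "accel": 1.0}, action_bias=-1.0))  # -1.0
```

Real MPC solvers optimize over continuous controls with constraints instead of enumerating sequences, but the structure is the same: the LLM only reshapes the cost, while the optimizer still produces the low-level commands.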

Further reading:

Challenges and Solutions of Autonomous Driving Approaches: A Review: https://ssrn.com/abstract=5239931

Autonomous driving: Modular pipeline Vs. End-to-end and LLMs: https://medium.com/@samiratra95/autonomous-driving-modular-pipeline-vs-end-to-end-and-llms-642ca7f4ef89

References:

[1] Zhou, X., Liu, M., Yurtsever, E., Zagar, B. L., Zimmer, W., Cao, H., & Knoll, A. C. (2024). Vision language models in autonomous driving: A survey and outlook. IEEE Transactions on Intelligent Vehicles.

[2] Zhu, Y., Wang, S., Zhong, W., Shen, N., Li, Y., Wang, S., ... & Li, L. (2024). Will Large Language Models be a Panacea to Autonomous Driving? arXiv preprint arXiv:2409.14165.

[3] Yang, Z., Jia, X., Li, H., & Yan, J. (2023). LLM4Drive: A survey of large language models for autonomous driving. arXiv preprint arXiv:2311.01043.

[4] Qian, K., Ma, Z., He, Y., Luo, Z., Shi, T., Zhu, T., ... & Matsumaru, T. (2024). FASIONAD: FAst and Slow FusION Thinking Systems for Human-Like Autonomous Driving with Adaptive Feedback. arXiv preprint arXiv:2411.18013.

[5] Xu, Y., Hu, Y., Zhang, Z., Meyer, G. P., Mustikovela, S. K., Srinivasa, S., ... & Huang, X. (2024). VLM-AD: End-to-end autonomous driving through vision-language model supervision. arXiv preprint arXiv:2412.14446.

[6] Xu, Z., Zhang, Y., Xie, E., Zhao, Z., Guo, Y., Wong, K. Y. K., ... & Zhao, H. (2024). DriveGPT4: Interpretable end-to-end autonomous driving via large language model. IEEE Robotics and Automation Letters.

[7] Sha, H., Mu, Y., Jiang, Y., Chen, L., Xu, C., Luo, P., ... & Ding, M. (2023). LanguageMPC: Large language models as decision makers for autonomous driving. arXiv preprint arXiv:2310.03026.

[8] MathWorks, Model Predictive Control Toolbox documentation: https://www.mathworks.com/help/mpc/gs/what-is-mpc.html